Management consultants are always looking for ugly problems and broken things to fix. After all, we only get paid when we uncover difficult problems and fix them.

Clients do not always know what is wrong

What really surprises me is that many clients have trouble explaining what exactly is wrong and what they want done. They often talk about symptoms (flat revenues, dropping margins, or rising receivables) rather than root causes. As a result, much of the burden of scoping the project falls on the consultant’s shoulders.

Over the years, consulting firms have created a number of diagnostic tools to help identify problem areas: SWOT, benchmarks, McKinsey 7S, pricing waterfalls, financial analysis, the BCG growth matrix, surveys, workshops and even simple checklists. If consultants are business doctors, these tools are the x-ray, thermometer, blood pressure gauge or blood tests used in a physical exam. They are not the treatment, just the diagnostics to find the illness.

Maturity model basics

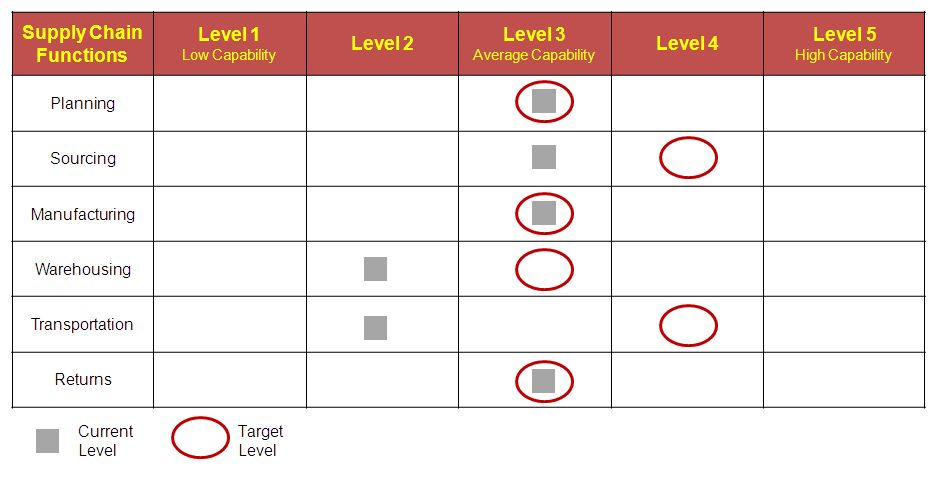

A common tool is the maturity model, which gauges the client’s maturity in a number of areas and points out areas for improvement. It often looks like a simple report card or Excel table, but there is good stuff there. In the example above, the functions/capabilities are listed on the left and the maturity levels across the top. So in the first row, the supply chain planning group is performing at level 3, which, for this client, is about where they want to be.
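To make the grid concrete, here is a minimal sketch, assuming a 1 to 5 scale and a single target level per capability. The `Capability` class, the `improvement_areas` helper and every row except supply chain planning are hypothetical illustrations, not taken from any firm's model. It simply compares each capability's current level to its target and lists the shortfalls, largest first.

```python
from dataclasses import dataclass


@dataclass
class Capability:
    """One row of a hypothetical maturity grid (levels 1 = lowest, 5 = highest)."""
    name: str
    current: int  # level the assessment assigned
    target: int   # level the client wants to reach

    def __post_init__(self):
        # Reject levels outside the assumed 1-5 scale
        for level in (self.current, self.target):
            if not 1 <= level <= 5:
                raise ValueError(f"{self.name}: level {level} is outside 1-5")

    @property
    def gap(self) -> int:
        # Positive gap = room for improvement; zero or negative = at or above target
        return self.target - self.current


def improvement_areas(grid):
    """Return capabilities below target, largest gap first."""
    below = [c for c in grid if c.gap > 0]
    return sorted(below, key=lambda c: c.gap, reverse=True)


# Hypothetical grid; only the supply chain planning row echoes the example in the text.
grid = [
    Capability("Supply chain planning", current=3, target=3),
    Capability("Procurement", current=2, target=4),
    Capability("Logistics", current=2, target=3),
]

for c in improvement_areas(grid):
    print(f"{c.name}: level {c.current} -> {c.target} (gap {c.gap})")
```

Supply chain planning drops out because it already sits at its target, which mirrors the point that level 5 is not the goal everywhere.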

Here are some common questions people have about maturity models:

#1: Shouldn’t the target be level 5 (highest capability) for everything?

Probably not. Getting to level 5 (highest maturity) is usually prohibitively expensive, or even impossible. How difficult would it be to be as efficient as Southwest, as customer-driven as Nordstrom, and as innovative as Apple? It is probably smarter to choose the areas where you really want to excel, and pick your battles.

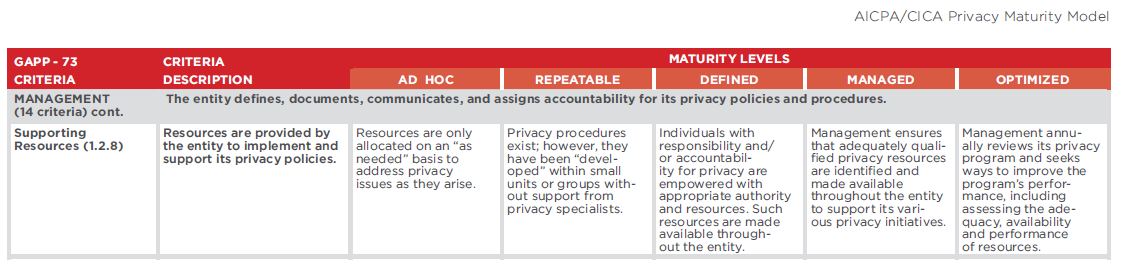

#2: What are the criteria for the maturity levels?

The criteria are set ahead of time. The consultant writes a description for each box in the grid, so there is a definition for planning at L1, L2, L3, L4 and L5. Putting it together can be laborious, but without clear definitions, bucketing performance into levels has no meaning. The graphic above shows detail from the AICPA / CICA Privacy Maturity Model (March 2011).

#3: Isn’t this all just subjective opinion?

Yes . . . There will be some subjective elements and room for interpretation. In the AICPA example above, what do “adequate and qualified” privacy resources mean? Is that a chief risk officer with a PhD or two college interns with online training?

. . . and no. a) Many maturity models are industry-specific (e.g., healthcare vs. automotive), so they can be more detailed and relevant. b) Good maturity models are based on benchmark or survey data, so there is quantitative data behind the definitions. c) Finally, consultants survey a large enough group of people (e.g., executives, senior managers, line workers) that the results are representative. You don’t want to survey only the CEO and treat the answer as gospel.

#4: Who decides the current level of performance?

The consultant can decide . . . The team can assess the client’s maturity (based on interviews, data analysis and comparison to competitors) and present it to the client. If you have calibrated the assessment with the client along the way, it will be about 80% right.

. . . or the client can decide. You can poll stakeholders ahead of time with an online survey, or give them scorecards and ask them to self-evaluate in the middle of a workshop. Both work well. The hardest part is describing the maturity levels coherently and succinctly; otherwise, the exercise gets really boring, really quickly.
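When the client self-evaluates, the scores still need to be rolled up into one level per capability. A minimal sketch, assuming each stakeholder rates each capability on a 1 to 5 scale: take the median (less sensitive to one outlier than the mean) and flag capabilities where ratings are spread widely, since that disagreement is often worth discussing in the workshop. The response data, the `summarize` function and the spread threshold of 3 are all hypothetical choices for illustration.

```python
from statistics import median

# Hypothetical survey responses: each stakeholder rates each capability 1-5.
responses = {
    "Supply chain planning": [3, 3, 4, 2, 3],
    "Procurement": [1, 4, 2, 5, 2],
}

# Assumption: a max-min range this wide signals disagreement worth discussing.
SPREAD_THRESHOLD = 3


def summarize(scores_by_capability, spread_threshold=SPREAD_THRESHOLD):
    """Collapse per-stakeholder scores into a median level and a discussion flag."""
    summary = {}
    for capability, scores in scores_by_capability.items():
        if not scores:
            raise ValueError(f"no responses for {capability}")
        spread = max(scores) - min(scores)
        summary[capability] = {
            "median": median(scores),
            "n": len(scores),
            "discuss": spread >= spread_threshold,
        }
    return summary


for capability, s in summarize(responses).items():
    flag = " <- discuss in workshop" if s["discuss"] else ""
    print(f"{capability}: median {s['median']} (n={s['n']}){flag}")
```

Reporting `n` alongside each median is a reminder that a handful of responses (or only the CEO's) is not representative.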

#5: Isn’t this a big marketing tool?

Of course. This stuff works. Take a look at Accenture’s Green Maturity Assessment online survey tool: very slick and easy to use. It has the added benefit of collecting “baseline” data (company size, geography, performance), which creates more data points against which to compare future respondents.

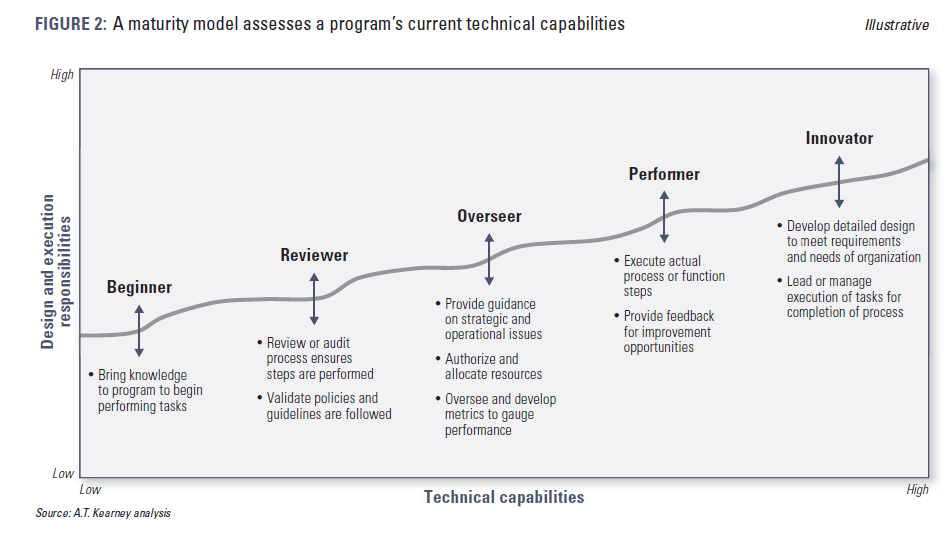

#6: Are there other formats of maturity models?

Yes. AT Kearney has a maturity model that looks like a stair-step.

#7: What happens after the maturity model?

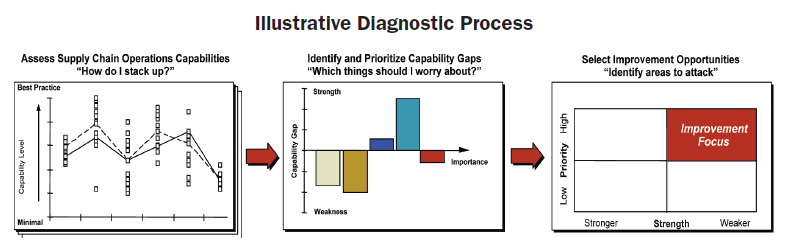

Prioritize the opportunities. Maturity models are usually the first step in a larger opportunity identification process. The Booz Allen Supply Chain Maturity Model white paper shows this process clearly.

#8: What else are maturity models good for?

Structuring the problem. One of the most insightful parts of a maturity model is not the detailed descriptions but the high-level structure: the “buckets” of capabilities being evaluated. Consulting firms usually spend a lot of time and heartache picking categories that are both relevant and MECE (mutually exclusive, collectively exhaustive). That structure helps frame the discussion and prompts the right questions, which is half of a consultant’s job.


Photo credit: Flickr, Ernstl, AICPA website, Accenture website, AT Kearney website, and Booz Allen website

I think this is a good summary of CMM in one place. It saved me a lot of time. From a consulting and tools point of view, one thing I think is needed is a way to address the fundamental changes our companies are going through because of social, mobile and shared economies of scale. These need consideration at the industry level (sort of like reworking the good old Porter model for CEOs), followed by a dive down into ingrained processes, their relevance and their revitalization.

Thanks for the comment. Yes, I agree maturity models are useful to an extent. They are a way to compare a current (and often chaotic) situation against several factors (e.g., rating a soccer team on passing, shooting and scoring), but they are inherently subjective. The factors you choose and how strictly you define the top level (excellent) affect the outcome. Clients’ self-evaluations are subjective too: they might over- or under-rate themselves depending on how the project is framed and how the survey nudges them to answer.

For SO-MO-LO (social, mobile, local), I agree that many “frameworks” are a bit rigid and show only one point in time. In my humble opinion, frameworks are useful for a current-state assessment; they are diagnostic, not therapeutic. Clients or consultants who believe maturity models are the answer are regressing to the mean. They are looking backwards, not forwards. Red ocean.

Implementing and mastering a process can mean many things: introducing a new concept or incremental improvements to a project team, or rolling out an entirely new set of competencies to a team, from strategic planning to time reporting.

Agreed.

–Are CMMs still being used? There don’t seem to be many references since about 2012. Have they fallen out of favor?

–What are their strengths and weaknesses?

–Do clients put the results to good use? What follow-up or info-graphics help?

–I am developing one for energy efficiency implementation in research buildings: stakeholder and system maturity, about 8 categories.

–Are there online tools I can use to compare participants’ experience, feedback or interactivity?

–Is it a benchmarking tool to compare at a later time?

Are maturity models still used? Definitely. They are a great way to evaluate a client’s situation using pseudo-objective criteria, and they provide a nice clean table to organize the categories and drivers. In your case, perhaps energy conservation, fixed cost, variable cost, operational complexity, etc.

Strengths / weakness – too long to list.

Do clients put it to use – 80/20. Only 20% of recommendations are implemented fully (in my crass estimation).

Online tools – not sure what that means.

Benchmarking: yes. If you can collect a large enough sample, it will be a HUGE resource for you longer-term.

Hope that is helpful, and encouraging.

Thanks for the reply. Relieved to know our design steering committee has a good chance of success.

Right now, our most pressing issue is building an online or Excel response platform for respondents. The Google Sheet revisions have become cumbersome, so we are looking for a more locked-down, cloud-based platform that records responses confidentially and whose content can be updated occasionally. Does that make sense? I would be grateful to discuss this on the phone if you are available. You can find me in the UC Davis directory.

Hello. You will want to use Qualtrics, SurveyMonkey or something similar to collect survey information anonymously.

Alternatively, you can create a web-based Tableau portal, but that requires some Tableau licenses and light “programming”.

In the short run – go with a free survey tool and periodically “drop” the results into chart form.

See what adoption looks like (how big is your n), before you (over)invest.

My thought.

We tried the Google spreadsheets and they got too complicated, AND that is good advice about not over investing.

Ahah… Tableau. I was not familiar with that platform, and it looks promising, though I don’t know the costs, capabilities or learning curve. Are you available to discuss your experiences with it?

Sorry for being elusive. This is an anonymous blog for now. Email me at consultantsmind1 AT gmail.com, if you like.